How do you know how accurate your GPS is? This question once again came up when I started looking into a "plug and play" GPS. Unfortunately, there's no simple answer to this question, so there will be a few posts looking at different aspects.

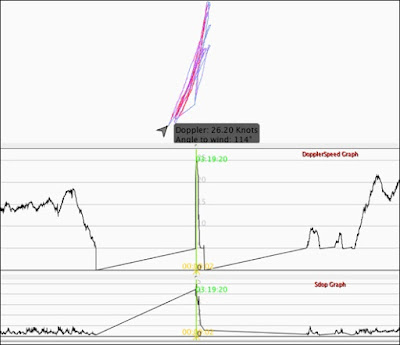

In search of the answer, the first thing to try is to use two GPS units right next to each other, and compare their speeds. Here is an example where I compared two Motion GPS units in a driving test:

The speeds from one unit are drawn in red, the speeds from the other unit in blue. A lot of times, the speeds are so close that only one color is visible. Here's a zoom-in:

In the selected 10 second region (highlighted in yellow), the two GPS units gave almost identical speeds. For individual points, the speed differences were mostly below 0.1 knots; over the 10-second region, the average speeds were 13.482 and 13.486 knots. That's a rather small difference of 0.004 knots!

But the graph shows that this is not always the case. If we look at the region to the right, the observed differences get much larger:

Here, one GPS reports a 10-second average speed of 19.926 knots, while the other GPS reports 19.664 knots - a difference of 0.262 knots. In the context of a speed competition like the GPS Team Challenge, that's a pretty large difference. One interesting thing to note is that the speeds sometimes deviate significantly for more than a second; the dip in the red track in the picture above, for example, is about 3 seconds long. This is non-random error: in the 10 Hz data for the graph above, the "dip" extends over almost 30 points, which we'd expect to happen once every billion points by chance. We'll get back to this when we look at estimating the likely error of speed results.

The most straightforward way to compare different GPS units is to compare the results in different top speed categories. Here's a look at some of these, generated using the "Compare files" function in GPS Speedreader:

The table shows the speed results for the two devices, as well as the "two sigma" error estimates in the "+/-" column. The calculation of the result error estimates assumes that the errors are random, so that they cancel each other out more and more as we look at more points: longer times or longer distances. This leads to higher error estimates for the 2 second results, roughly 2-fold lower estimates for the 10 second results, and very low estimates for the nautical mile (1852 m) and the 1 hour results. About 95 out of 100 times, we expect the "true" speed to be within the range defined by the +/- numbers. Most of the time, we expect the ranges of the two devices to overlap. Only about 1 in 10,000 times would we expect random errors to make the results so different that the ranges do not overlap, which GPS Speedreader highlights with a red background.
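To make the "errors cancel out over more points" idea concrete, here is a minimal sketch. It assumes independent per-point errors that all have the same standard deviation, and 10 Hz data; the function names, the 0.4 knot point error, and the 0.05 knot "+/-" values are made up for illustration. This is not necessarily the exact formula GPS Speedreader uses.

```python
import math

def average_error(point_sigmas):
    """Two-sigma error of an average speed, assuming independent random point errors."""
    n = len(point_sigmas)
    # Independent errors add in quadrature, then get divided by the number of points.
    return 2.0 * math.sqrt(sum(s * s for s in point_sigmas)) / n

def ranges_overlap(speed_a, err_a, speed_b, err_b):
    """True if the ranges [speed - err, speed + err] of two results overlap."""
    return abs(speed_a - speed_b) <= err_a + err_b

RATE_HZ = 10       # logging rate (assumed)
POINT_SIGMA = 0.4  # hypothetical single-point error in knots

err_2s = average_error([POINT_SIGMA] * (2 * RATE_HZ))
err_10s = average_error([POINT_SIGMA] * (10 * RATE_HZ))
ratio = err_2s / err_10s  # sqrt(5): 5 times more points, about 2.2 times lower error

print(f"2 s: +/- {err_2s:.3f}  10 s: +/- {err_10s:.3f}  ratio: {ratio:.2f}")

# The two 10-second averages quoted above, with hypothetical +/- values of 0.05 knots:
print(ranges_overlap(13.482, 0.05, 13.486, 0.05))  # close results
print(ranges_overlap(19.926, 0.05, 19.664, 0.05))  # results 0.262 knots apart
```

With equal point errors, the estimate shrinks with the square root of the number of points, which is where the "roughly 2-fold" drop from 2 seconds to 10 seconds comes from.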

But the table shows that 5 of the 14 results are more different than expected. 5 out of 14, not one in ten thousand! There are three possible (and not mutually exclusive) explanations for this:

- One (or both) of the GPS units is not working correctly.

- The error is not random, so that the conclusions based on assuming random error are incorrect.

- The single-point error estimates that the GPS gives are too optimistic.
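The second explanation ties back to the 3-second dip mentioned earlier. Here is a rough sketch of that estimate, under the simplifying assumption that with purely random error, each point is independently just as likely to fall above as below the other unit's speed. The function name and the 50% figure are illustrative assumptions, not something taken from the GPS data.

```python
def run_probability(run_length, p_below=0.5):
    """Chance that run_length consecutive points all fall below the other unit's speed."""
    return p_below ** run_length

# The dip covers almost 30 points in the 10 Hz data.
p = run_probability(30)
one_in = 1 / p

print(f"about 1 in {one_in:,.0f}")
```

Under this assumption, a 30-point run is roughly a one-in-a-billion event, so seeing it in a single short drive is a strong hint that the errors of neighboring points are correlated.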

Let's have one more look at the data, and this time, include a graph of the single-point error estimates:

We can see that the point error estimates in the regions where the curves diverge are larger, which is a good sign. But do they increase enough? And is the difference that we see due to one GPS being "wrong", or do both of them disagree, and the truth lies somewhere in the middle? To answer these questions, we need more data.

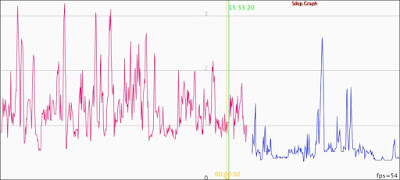

Fortunately, this was a driving test, so getting more data was easy: just put more GPS units on the dashboard! So I added a few more: a couple of Locosys units (a GW-52 and a GW-60), a couple of u-blox prototypes that have been approved for use in the GPS Team Challenge (a BN880 and a BN280, both Openlog-based), and my current u-blox Neo-M9/Openlog Artemis prototype. That makes a total of 7 GPS units: two with a Locosys SiRF-type GPS unit, and five with a u-blox based design. All units were set to log at 5 Hz for this drive. Here's an overview:

What is immediately apparent is that the error estimates differ a lot more than the speed estimates. In particular, the error estimates from the Locosys units, shown in purple and green, are much higher than the u-blox estimates, except when the car was stationary.

Here is a zoom-in picture:

Most of the GPS units are pretty close together for most of the time, but several units stick out a bit by showing larger and more frequent deviations. But just like in the first and second pictures in this post, the details vary quite a bit between different sections of the drive.

So we're back at the question: how can we quantify the error of each of the units, using all the information in the tracks, instead of limiting ourselves to just a few top speeds?

Along the same lines, what can we learn about the point error estimates ("Speed Dilution of Precision", or SDoP, in Locosys parlance, and "speed accuracy", or sAcc, in u-blox parlance)?

I'll start looking at answers to these questions in my next post.